Publications

Theses

Abstract

Voices are ubiquitous and familiar, so much so that it is easy to forget how fundamentally important vocal signals really are to how we relate to others and to ourselves. Vocal experiences can take many forms (audible, tangible, silent, internal, external, neurological, remote, etc.) and offer great potential for bridging diverse fields. I am proposing a new approach for looking at the voice holistically, in its experiential nature, based on its propensity to connect. This dissertation introduces and examines methods for the creation of interactive voice-based experiences that foster novel and profound connections. I present three projects to support and illustrate this approach by establishing connections at three levels: individual, interpersonal, and extending beyond human languages. The Memory Music Box establishes a sense of connection across space and time, and is specially designed to encourage conversation and to enhance a sense of connectedness for older adults. With the Mumble Melody initiative, I extract musicality from everyday speech as a way to access inner voice processes and help people who stutter gain increased fluency. Finally, with the Sonic Enrichment at the Zoo project, I present ways to improve connections within and between species (including between humans and animals) by exploring sonic and vocal enrichment interventions at the San Diego Zoo. Each of these projects represents a different angle from which to consider the potential of the voice for creating new forms of connection. Such is the vision of this work. I consider the notion of connectedness broadly, including the raising of personal self-awareness, the creation of strong interpersonal bonds, and the potential to create new forms of empathetic understanding with other species. Although this research focuses on the voice, it extends beyond this realm. The broader themes examined through this work have implications in the fields of neurology, cognitive sciences, assistive technologies, human-computer interactions, communication sciences, and rapport-building. Indeed, since the voice is a versatile projection of ourselves into the world, it offers a unique perspective for the study and enhancement of cognition, learning, personal development, and wellbeing.

Kleinberger R.

Singing about Singing: Using the Voice as a Tool for Self-Reflection

MS Thesis. Massachusetts Institute of Technology 2014 [paper]

Abstract

Our voice is an important part of our individuality. From the voice of others, we are able to understand a wealth of non-linguistic information, such as identity, social-cultural clues and emotional state. But the relationship we have with our own voice is less obvious. We don’t hear it the same way others do, and our brain treats it differently from any other sound we hear. Yet its sonority is highly linked to our body and mind, and is deeply connected with how we are perceived by society and how we see ourselves. This thesis defends the idea that experiences and situations that make us hear, see and touch our voice differently have the potential to help us learn about ourselves in new and creative ways. We present a novel approach for designing self-reflective experiences based on the voice. After defining the theoretical basis, we present four design projects that inform the development of a framework for Self-Reflective Vocal Experiences. The main objective of this work is to provide a new lens for people to look at their voice, and to help people gain introspection and reflection upon their mental and physical state. Beyond this first goal, the methods presented here also have extended applications in the everyday use of technology, such as personalization of media content, gaming and computer-mediated communication. The framework and devices built for this thesis can also find a use in subclinical treatment of depression, tool design for the deaf community, and the design of human-computer interfaces for speech disorder treatment and prosody acquisition.

Kleinberger R.

Musical virtual environment: an interactive machine vision tool for live performance

UCL VEIV program. Virtual Environment, Imaging and Visualisation 2012

Abstract

It is well known that non-musical communication between musicians is important during performances. Among all the meta-data produced in concert, gesturing (from eye contact to beat tapping to anticipative motions) is a privileged channel to convey intentions, enabling the ensemble to stay synchronous, and to adapt to the situation in real time. But keeping a constant eye on other musicians while focussing on one's own playing can be a perilous exercise. We believe that processing some of that information with an automatic visual system would improve the ensemble experience of performing. This project is about the optical capture and treatment of gestural information from an ensemble leader and its transmission to the other members of the ensemble.

Conference Papers

2021

Kleinberger R., Sands J., Sareen H., Baker J.M.

TamagoPhone: Augmented incubator to maintain vocal interaction between bird parents and egg during artificial incubation.

VIHAR 2021: 3rd International Workshop on Vocal Interactivity in-and-between Humans, Animals and Robots, Paris, France 2021 [paper][demo video]

Abstract

It is common practice for zoos and bird conservationists to incubate bird eggs in artificial incubators to maximize hatching rates. In the wild or even in zoos within enclosures, eggs can be vulnerable to predators, diseases or temperature problems, and the use of artificial incubators during part or the entirety of the incubation time can greatly improve the chances of survival of the chicks and support preservation efforts for endangered species. However, some species exhibit important prenatal vocal interaction while within the egg. Indeed, parent birds often produce vocalisations directed to their eggs and in some species, chicks produce calls from within the egg a few days before hatching. Standard artificial incubation techniques deprive embryonic chicks of integral parent-offspring vocal communications during early development. Recent research has shed light on specific behavioral contexts associated with vocal pre-hatching events for specific species. Led by such research, there have been attempts to use curated static recordings during artificial incubation and hand-rearing to alleviate the lack of vocal interactions. However, our understanding of those vocal interactions is still in its infancy, and they might never be understood well enough to synthesize meaningful replacements or select relevant recordings. Instead, we propose supplementing existing egg incubation techniques with a two-way, real-time audio system to allow parent birds and unhatched eggs to communicate with each other remotely during incubation. To achieve this, we designed a framework and approach called TamagoPhone. With this approach, the real egg removed from the nest is replaced by an augmented “dummy” egg, embedding a microphone and speaker, which is then cared for by the parent. The artificial incubator is also augmented with microphones and speakers, in addition to the traditional temperature, humidity, and motion control systems. Both sides, parent and egg, are connected by a two-way audio streaming platform, with the audio components inconspicuously integrated.

2020

Kleinberger R., Harrington A., Yu L., van Troyer A., Su D., Baker J., Miller G.

Interspecies Interactions Mediated by Technology: an Avian Case Study at the Zoo

CHI 2020: ACM CHI Conference on Human Factors in Computing Systems, Honolulu, USA, 2020 [paper][demo video]

Abstract

Enrichment is a methodology for caregivers to offer animals in managed care improved psychological and physiological well-being. Although many species rely on auditory senses, sonic enrichment is rarely implemented. Zoo soundscapes are generally dominated by human-generated noises, and do not respond systematically or meaningfully to animals’ behavior. Designing interactive sonic enrichment systems for animals presents unique ergonomic, ethical, and agency-related challenges. We deployed two novel interventions at XXX Zoo to allow S, a music-savvy hyacinth macaw, to control his sonic environment. Our results suggest that (1) the bird uses, understands, and benefits from the system, and (2) visitors play a major role in the bird’s engagement with this technology. Through his new agency, the bird effectively gained more control over his interactions with the public. The interaction became an interspecies experience mediated by technology. The resulting triangular interaction (animal-human-computer) may inform mediated interspecies experiences in the future.

2019

Kleinberger R., Stefanakis G., Ghosh S., Machover T., Erkkinen M.

Fluency Effects of Novel Acoustic Vocal Transformations in People Who Stutter: An Exploratory Behavioral Study

SNL 2019: Society for the Neurobiology of Language, Helsinki, Finland, August 2019 [paper][demo video]

Abstract

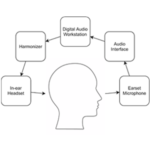

Persistent developmental stuttering is a complex motor-speech disturbance characterized by dysfluent speech and other secondary behaviors. Stuttering phenotypes and severity vary across and within individuals over time and are affected by the speaking context. In people who stutter, speech fluency can be improved by altering the auditory feedback associated with overt self-generated speech. This is accomplished by modulating the vocal acoustic signal and playing it back to the speaker in real-time. Most research to date has focused on simple delays and pitch shifts. New embedded systems, technologies, and software enable a re-evaluation and augmentation of the shifted feedback ideas. The current study seeks to explore alternative, novel modulations to the acoustic signal with the goal of improving fluency.

Kleinberger R., Rieger A., Sands J., Baker J.

Supporting Elder Connectedness through Cognitively Sustainable Design Interactions with the Memory Music Box.

UIST 2019: Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology, New Orleans, USA, October 2019 [paper][demo video]

Abstract

Isolation is one of the largest contributors to a lack of wellbeing, increased anxiety and loneliness in older adults. In collaboration with elders in living facilities, we designed the Memory Music Box, a low-threshold platform to increase connectedness. The HCI community has contributed notable research in support of elders through monitoring, tracking and memory augmentation. Despite advances in the Information and Communication Technologies (ICT) field in providing new opportunities for connection, challenges in accessibility increase the gap between elders and their loved ones. We approach this challenge by embedding a familiar form factor with innovative applications, performing design evaluations with our key target group to incorporate multi-iteration learnings. These findings culminate in a novel design that facilitates elders in crossing technology and communication barriers. Based on these findings, we discuss how future inclusive technologies for older adults can balance ease of use, subtlety and elements of Cognitively Sustainable Design.

Kleinberger R., Baker J., Miller G.

Initial Observation of Human-Bird Vocal Interactions in a Zoological Setting

VIHAR 2019: 2nd International Workshop on Vocal Interactivity in-and-between Humans, Animals and Robots, Queen Mary University, London, UK, August 2019 [paper]

Abstract

Vocal interactions between humans and non-human animals are pervasive, but studies are often limited to communication within species. Here, we conducted a pilot exploration of vocal interactions between visitors to the San Diego Zoo Safari Park and Sampson, an 18-year-old male Hyacinth Macaw residing near the entrance. Over the course of one hour, 82 vocal and behavioral events were recorded, and various relationships between human and bird behavior were noted. Analyses of this type, applied to large datasets with assistance from artificial intelligence, could be used to better understand the impacts, positive or negative, of human visitors on animals in managed care.

Kleinberger R., Stefanakis G., Franjou S.

Speech Companions: Evaluating the Effects of Musically Modulated Auditory Feedback on the Voice.

ICAD 2019: The 25th International Conference on Auditory Display, UK, Northumbria University, June 2019 [paper]

Abstract

Changing the way one hears one’s own voice, for instance by adding delay or shifting the pitch in real-time, can alter vocal qualities such as speed, pitch contour, or articulation. We created new types of auditory feedback called Speech Companions that generate live musical accompaniment to the spoken voice. Our system generates harmonized chorus effects layered on top of the speaker’s voice that change chord at each pseudo-beat detected in the spoken voice. The harmonization variations follow predetermined chord progressions. For the purpose of this study we generated two versions: one following a major chord progression and the other one following a minor chord progression. We conducted an evaluation of the effects of the feedback on speakers and we present initial findings assessing how different musical modulations might potentially affect the emotions and mental state of the speaker as well as semantic content of speech, and musical vocal parameters.

van Troyer A., Kleinberger R.

From Mondrian to Modular Synth: Rendering NIME using Generative Adversarial Networks

NIME 2019: New Interfaces for Musical Expression, Porto Alegre, Brazil, June 2019 [paper][demo video]

Abstract

This paper explores the potential of image-to-image translation techniques in aiding the design of new hardware-based musical interfaces such as MIDI keyboard, grid-based controller, drum machine, and analog modular synthesizers. We collected an extensive image database of such interfaces and implemented image-to-image translation techniques using variants of Generative Adversarial Networks. The created models learn the mapping between input and output images using a training set of either paired or unpaired images. We qualitatively assess the visual outcomes based on three image-to-image translation models: reconstructing interfaces from edge maps, and collection style transfers based on two image sets: visuals of mosaic tile patterns and geometric abstract two-dimensional arts. This paper aims to demonstrate that synthesizing interface layouts based on image-to-image translation techniques can yield insights for researchers, musicians, music technology industrial designers, and the broader NIME community.

Kleinberger R., Huburn J., Grayson M., Morrison C.

SNaSI: Social Navigation through Subtle Interactions with an AI agent.

RTD 2019: Research Through Design, Delft, Netherlands, March 2019 [paper]

Abstract

Technology advances have set the stage for intelligent visual agents, with many initial applications being created for people who are blind or have low vision. While most focus on spatial navigation, recent literature suggests that supporting social navigation could be particularly powerful by providing appropriate cues that allow blind and low vision people to enter into and sustain social interaction. A particularly poignant design challenge to enable social navigation is managing agent interaction in a way that augments rather than disturbs social interaction. Usage of existing agent-like technologies has surfaced some of the difficulties in this regard. In particular, it is difficult to talk to a person when an agent is speaking to them. It is also difficult to speak with someone fiddling with a device to manipulate their agent. In this paper we present SNaSI, a wearable designed to provoke the thinking process around how we support social navigation through subtle interaction. Specifically, we are interested in generating thinking about the triangular relationship between a blind user, a communication partner and the system containing an AI agent. We explore how notions of subtlety, but not invisibility, can enable this triadic relationship. SNaSI builds upon previous research on sensory substitution and the work of Bach-y-Rita (Bach-y-Rita 2003) but explores those ideas in the form of a social instrument.

2018

Kleinberger R.

Vocal Musical Expression with a Tactile Resonating Device and its Psychophysiological Effects

NIME 2018: New Interfaces for Musical Expression, Blacksburg, VA, USA, June 2018 [paper]

Abstract

This paper presents an experiment to investigate how new types of vocal practices can affect psychophysiological activity. We know that health can influence the voice, but can a certain use of the voice influence health through modification of mental and physical state? This study took place in the setting of the Vocal Vibrations installation. For the experiment, participants engaged in a multisensory vocal exercise with limited guidance to obtain a wide spectrum of vocal performances across participants. We compared characteristics of those vocal practices to the participant’s heart rate, breathing rate, electrodermal activity and mental states. We obtained significant results suggesting that it might be possible to correlate psychophysiological states with characteristics of the vocal practice if we also take into account biographical information.

Kleinberger R., Panjwani A.

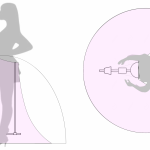

Digitally Enchanted Wear: a Novel Approach in the Field of Dresses as Dynamic Digital Displays.

TEI 2018: International Conference on Tangible, Embedded, and Embodied Interaction, Stockholm, Sweden, March 2018 [paper]

Abstract

We introduce the term Digital Dresses as Dynamic Displays as an emergent field in the domains of Wearable Computing and Embodied Interactions. This recent approach consists of turning clothing into visual – and sometimes audiovisual – displays to enable novel forms of interaction between the wearer, the viewer, the tangible clothing and the embedded content. In this context, we present Enchanted Wearable, a new optimized low-cost approach to create Digital Dresses as Dynamic Displays. Enchanted Wearable is a technologically embellished and augmented garment containing a portable rear dome projection system that transforms the clothing fabric into a blank canvas displaying audiovisual content. With this system, we create a new form of expression through clothing to reflect identity, personality, emotions and inner states of the wearer. In this paper we first present the growing field of Digital Dresses as Dynamic Displays, then we survey and analyse existing prior art in this field using a specific list of characteristics: display technology, wearability, interactivity, brightness, context. Finally, we present the design and technology behind our new Enchanted Wearable system and explain how it brings new perspectives to the field.

2017

Rieger A., Kleinberger R.

Learning from Lullabies: A Cognitive-Behavioral Exploration of the Role of Lullabies in Infant and Adult Well-Being

GAPS 2017: Global Arts and Psychology Seminar, Tufts University, Somerville, December 2017

Abstract

It is known that lullabies have soothed generations of our ancestors. According to Hellberg’s 2015 paper “Rhythm, Evolution, Neuroscience in Lullabies and Poetry”, lullabies are traced to 2000 BC, the first documented case etched upon a Babylonian clay tablet by a mother over 4000 years ago. Despite the longstanding practice of lullabies, there is much to understand regarding their use and potentially beneficial applications in adult cognition and wellbeing.

In 2013, UCLA ethnomusicologist Pettit published a study revealing “live lullabies slowed infant heart rate, improved sucking behaviors…critical for feeding, increased periods of “quiet alertness” and helped the babies sleep.” Those with child-rearing experiences are aware of how babies are comforted by the slow, sing-song vocal tones and oft unsettling lyrics. Despite recorded benefits, few take these drowsy, mournful songs beyond the nursery. In his research, neurologist Tim Griffiths explores how the limbic system’s emotional response to lullabies decreases arousal levels, thereby spurring pain attenuation.

Our research begins by exploring current music therapies and moves to examine functional applications of reintroducing the lullaby to positively affect hormonal, stress and cognitive levels. Furthermore, we examine how the act of singing a lullaby is beneficial to both singer and listener. The slow tempo encourages reduction of rapid heartbeat, aiding in stress reduction. Moreover, as Pettit (2013) and Loewy (2013) hypothesize, the frightening stories in lullabies often reflect a parent’s worst fears, ones curbed by the catharsis of verbalization. According to Abou-Saleh et al. (1998), lower prolactin levels were associated with postpartum depression in new mothers; such levels could be treated by lullabies. Studies by Huron (2011) and Sachs (2015) reveal that melancholy music triggers the endocrine neurons in the hypothalamus, which tricks the brain into a compensatory release of the hormone prolactin, key in grief attenuation and self-comfort. We then propose an intervention that encourages subjects to sing variations of lullabies to themselves directly following a stressful activity, while monitoring signs of stress by tracking (1) the autonomic nervous system, using wristbands capable of detecting sympathetic and parasympathetic activation and changes in heart rate, and (2) EEG stress markers in prefrontal regions (Fp1, Fp2 and Fpz). Control groups participate in similar activities such as controlled breathing and listening to recorded lullabies (which lack live participation and cathartic verbalization). Finally, we raise associated challenges in examining specific sonic and verbal features responsible for stress reduction while accounting for aspects of cognitive individuality and neurodiversity. Our work asks: why do we stop singing and listening to lullabies once we get beyond the nursery? We propose that, through our societal lens, we feel we do not need lullabies once we are older, despite the fact that life tends to increase in difficulty past the cradle.

We argue that lullabies are a rare example of a shared global phenomenon that is under-explored. We explore what would happen if we were to continue singing and listening to lullabies, and how we might create and develop new lullaby-inspired vocal practices in the form of a therapeutic cognitive-behavioral intervention for human adults.

2016

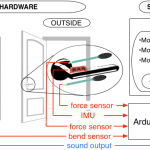

Kleinberger R., van Troyer A.

Dooremi: a Doorway to Music

NIME 2016: Conference on New Interfaces for Musical Expression, Griffith University, Brisbane, Australia, June 2016 [paper][demo video]

Abstract

The following paper documents the prototype of a musical door that interactively plays sounds, melodies, and sound textures when in use. We took the natural interactions people have with doors (grabbing and turning the knob, and pushing and pulling motions) and turned them into musical activities. The idea behind this project comes from the fact that the activity of using a door is almost always accompanied by a sound that is generally ignored by the user. We believe that this sound can be considered musically rich and expressive because each door has specific sound characteristics and each person makes it sound slightly different. By augmenting the door to create an unexpected sound, this project encourages us to listen to our daily lives with a musician’s critical ear, and reminds us of the musicality of our everyday activities.

Xiao Xiao, Haddad D. D., Sanchez T., van Troyer A., Kleinberger R., Webb P., Paradiso J., Machover T., Ishii H.

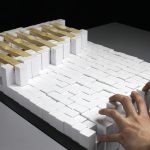

KinéPhone: Exploring the Musical Potential of an Actuated Pin-Based Shape Display

NIME 2016: 16th International Conference on New Interfaces for Musical Expression, Griffith University, Brisbane, Australia, 2016 [paper]

Abstract

This paper explores how an actuated pin-based shape display may serve as a platform on which to build musical instruments and controllers. We designed and prototyped three new instruments that use the shape display not only as an input device, but also as a source of acoustic sound. These cover a range of interaction paradigms to generate ambient textures, polyrhythms, and melodies. This paper first presents existing work from which we drew interactions and metaphors for our designs. We then introduce each of our instruments and the back-end software we used to prototype them. Finally, we offer reflections on some central themes of NIME, including the relationship between musician and machine.

J. Paradiso, C. Schmandt, K. Vega, C. Kao, R. Kleinberger, X. Liu, J. Qi, A. Roseway, A. Yetisen, J. Steimle, M. Weigel

Underware: Aesthetic, Expressive, and Functional On-Skin Technologies

ACM UbiComp/ISWC 2016: workshop [paper]

Abstract

Emerging technologies allow for novel classes of interactive wearable devices that can be worn directly on skin, nails and hair. This one-day workshop explores, discusses and envisions the future of these on-skin technologies. The workshop addresses three important themes: aesthetic design to investigate the combination of interactive technology with personalized fashion elements and beauty products, expressive and multi-modal interactions for mobile scenarios, and technical function, including novel fabrication methods, technologies and their applications. The goal of this workshop is to bring together researchers and practitioners from diverse disciplines to rethink the boundaries of technology on the body and to generate an agenda for future research and technology.

2015

Kleinberger R., Dublon G., Machover T., Paradiso J.

PHOX Ears: Parabolic, Head-mounted, Orientable, eXtrasensory Listening Device

NIME 2015: 15th International Conference on New Interfaces for Musical Expression, Baton Rouge, LA, June 2015 [paper]

Abstract

The Electronic Fox Ears helmet is a listening device that changes its wearer’s experience of hearing. A pair of head-mounted, independently articulated parabolic microphones and built-in bone conduction transducers allow the wearer to sharply direct their attention to faraway sound sources. Joysticks in each hand control the orientations of the microphones, which are mounted on servo gimbals for precise targeting. Paired with a mobile device, the helmet can function as a specialized, wearable field recording platform. Field recording and ambient sound have long been a part of electronic music; our device extends these practices by drawing on a tradition of wearable technologies and prosthetic art that blur the boundaries of human perception.

Kleinberger R.

V3: an Interactive Real-Time Visualization of Vocal Vibrations

SIGGRAPH 2015, Los Angeles Convention Center, CA, USA 2015 [paper]

Abstract

Our voice is an important part of our individuality but the relationship we have with our own voice is not obvious. We don’t hear it the same way others do, and our brain treats it differently from any other sound we hear. Yet its sonority is highly linked to our body and mind, and deeply connected with how we are perceived by society and how we see ourselves. The V3 system (Vocal Vibrations Visualization) offers an interactive visualization of vocal vibration patterns. We developed the hexauscultation mask, a headset sensor that measures bioacoustic signals from the voice at 6 points of the face and throat. Those signals are sent and processed to offer a real-time visualization of the relative vibration intensities at the 6 measured points. This system can be used in various situations such as vocal training, tool design for the deaf community, design of HCI for speech disorder treatment and prosody acquisition but also simply for personal vocal exploration.

2014

Holbrow C., Jessop E., and Kleinberger R.

Vocal Vibrations: A Multisensory Experience of the Voice

NIME 2014: 14th International Conference on New Interfaces for Musical Expression, Goldsmiths, University of London, UK, 2014 [paper]

Abstract

Vocal Vibrations is a new project by the Opera of the Future group at the MIT Media Lab that seeks to engage the public in thoughtful singing and vocalizing, while exploring the relationship between human physiology and the resonant vibrations of the voice. This paper describes the motivations, the technical implementation, and the experience design of the Vocal Vibrations public installation. This installation consists of a space for reflective listening to a vocal composition (the Chapel) and an interactive space for personal vocal exploration (the Cocoon). In the interactive experience, the participant also experiences a tangible exteriorization of his voice by holding the ORB, a handheld device that translates his voice and singing into tactile vibrations. This installation encourages visitors to explore the physicality and expressivity of their voices in a rich musical context.

Kory J. and Kleinberger R.

Social agent or machine? The framing of a robot affects people’s interactions and expressivity

HRI 2014: 2nd Workshop on Applications for Emotional Robots held in conjunction with the 9th ACM/IEEE International Conference on Human-Robot Interaction 2014

Abstract

During interpersonal interactions, humans naturally mimic one another’s behavior. Mimicry can be a measure of rapport or connectedness. Researchers have found that people will mimic robots, virtual avatars, and computers in social situations similar to those in which they mimic people. In this work, we explored how people’s perceptions of a robot during a social interaction influenced their expressivity and social behavior. Specifically, we examined whether the presentation or framing of a robot as a social agent versus as a machine could influence people’s behavior and responses, independent of any changes in the robot itself. Participants engaged in a ten-minute interaction with a social robot. For half the participants, the experimenter introduced the robot in a social way; for the other half, the robot was introduced as a machine. Our results showed that framing did have an effect: people who perceived the robot more socially spoke significantly more and were more vocally expressive than participants who perceived the robot as a machine. This study provides insight into how the context of a human-robot interaction can influence people’s reactions independent of the robot itself.

2013

Kleinberger R.

PAMDI Music Box: Primarily Analog-Mechanical, Digitally Iterated Music Box

NIME 2013: 13th International Conference on New Interfaces for Musical Expression, Daejeon + Seoul, Republic of Korea, 2013 [paper]

Abstract

PAMDI is an electromechanical music controller based on an expansion of the common metal music boxes. Our system enables an augmentation of a music box by adding different musical channels triggered and parameterized by natural gestures during the “performance”. All the channels are generated from the original melody recorded once at the start. We made a platform composed of a metallic structure supporting sensors that will be triggered by different natural and intentional gestures. The values we measure are processed by an Arduino system that sends the results by serial communication to a Max/MSP patch for signal treatment and modification. We will explain how our embedded instrument aims to bring to the player a certain awareness of the mapping and the potential musical freedom of the very specific – and not entirely automatic – instrument that is a music box. We will also address how our design tackles the different questions of mapping, ergonomics and expressiveness and how we are choosing the controller modalities and the parameters to be sensed.

Gold NE., Sandu OE., Palliyaguru PN., Dannenberg RB., Jin Z., Robertson A., Stark A., Kleinberger R.

Human-Computer Music Performance: From Synchronized Accompaniment to Musical Partner

SMC 2013: Sound and Music Computing Conference Stockholm, Sweden 2013 [paper]

Abstract

Live music performance with computers has motivated many research projects in science, engineering, and the arts. In spite of decades of work, it is surprising that there is not more technology for, and a better understanding of the computer as music performer. We review the development of techniques for live music performance and outline our efforts to establish a new direction, Human-Computer Music Performance (HCMP), as a framework for a variety of coordinated studies. Our work in this area spans performance analysis, synchronization techniques, and interactive performance systems. Our goal is to enable musicians to incorporate computers into performances easily and effectively through a better understanding of requirements, new techniques, and practical, performance-worthy implementations. We conclude with directions for future work.

2012

Jacques C., Calian D., Amati C., Kleinberger R., Steed A., Kautz J., Weyrich T.

3D-printing of non-assembly, articulated models

SIGGRAPH Asia, Singapore 2012 [paper]

Abstract

Additive manufacturing (3D printing) is commonly used to produce physical models for a wide variety of applications, from archaeology to design. While static models are directly supported, it is desirable to also be able to print models with functional articulations, such as a hand with joints and knuckles, without the need for manual assembly of joint components. Apart from having to address limitations inherent to the printing process, this poses a particular challenge for articulated models that should be posable: to allow the model to hold a pose, joints need to exhibit internal friction to withstand gravity, without their parts fusing during 3D printing. This has not been possible with previous printable joint designs. In this paper, we propose a method for converting 3D models into printable, functional, non-assembly models with internal friction. To this end, we have designed an intuitive workflow that takes an appropriately rigged 3D model, automatically fits novel 3D-printable and posable joints, and provides an interface for specifying rotational constraints. We show a number of results for different articulated models, demonstrating the effectiveness of our method.

Others

2016

Abstract

We introduce a design language to guide the theoretical and practical thinking around empathy and experience design. We start by identifying the lack of a comprehensive framework for empathy-focused design between and within the fields of design, cognition and human behavior. Based on these studies, we present a working definition of empathy, in order to facilitate understanding, discussing and designing for it. Further, we propose a design language to guide the concept of designing for empathy. Finally, informed by this new design language, we present three stories around projects we have developed in an effort to provide the public with a curated, although open-ended, experience of empathy, to improve the quality and depth of human interaction, with the higher objective of advancing individual and societal well-being.

2011

Kleinberger R.

Nanometre 551

Science fiction short story published in the short story collection “2084, le meilleur ou le pire des mondes”, paperback, 2010. [text]

Abstract (translated from French)

With the fourth bolt drawn, Guy Freitag pushed the handle of the front door, which let out a shrill creak. His door, like all doors, was not meant to be opened and closed at will. Yet this was the third time this month that Guy had to leave his conapt. This morning, his personal alarm had once again sounded at 5:12, accompanied by a message from the Ministry informing him of an “emergency situation requiring his bodily presence in the building of the General Directorate for the Protection of Information”. He would never have thought that his recent promotion would lead to so much physical travel. He nonetheless responded to the audible summons without delay, beginning his morning ritual 78 minutes early. After the dry shampoo and the manual de-shagging, he detached two tablets of “special breakfast” Soylent Grine and swallowed them with half a glass of hot, de-putrefied water. He put on his anti-R work suit and set about unlocking the entrance. This time it took him only a quarter of an hour to reach the exit of the building, for he now stepped with more agility over the sleeping bodies that crowded the staircase all the way down to the entrance hall.